NEXT + Music | Engineering the Future of Music with AI

Members

Overview

The NEXT + Music group stands at the forefront of intelligent music research, pioneering the deep fusion of artificial intelligence with musical creativity, perception, and experience. Our research spans several core areas, including automated music generation, where we design advanced models for lyric-to-melody synthesis and text-to-music composition. We are equally devoted to multimodal music intelligence, exploring the complex interplay between music and other modalities—such as text, images, and video—to enable rich, interactive, and emotionally resonant experiences.

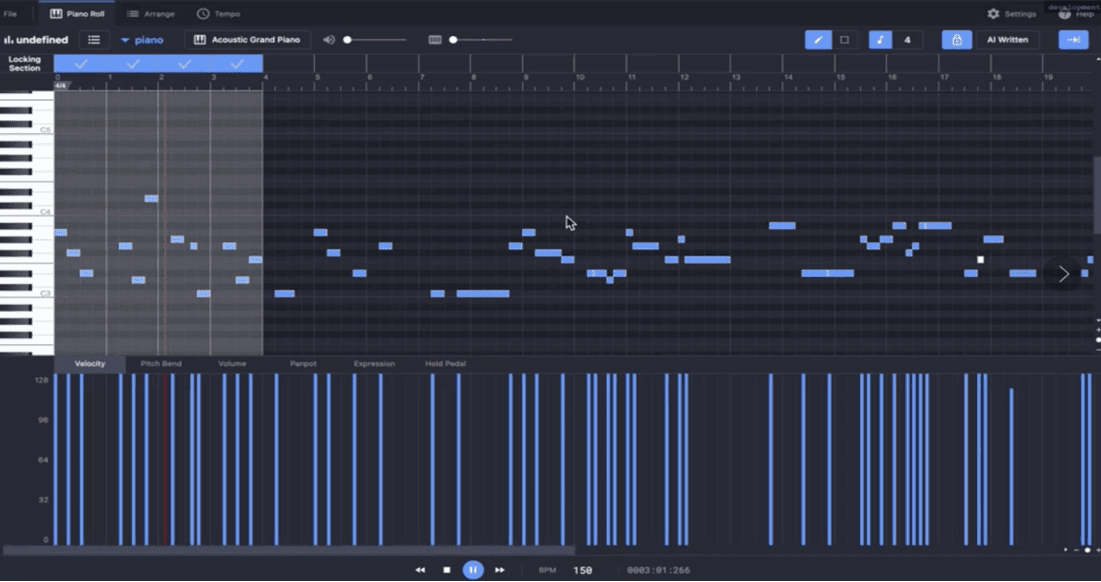

Building upon these foundations, we are expanding our efforts in three directions: AI-driven digital audio workstations (AI-DAWs) that empower artists to collaborate with intelligent systems in real time; spatial music computation that redefines sound creation and perception in immersive environments; and computational music aesthetics, which seeks to model the subjective and cultural dimensions of musical beauty.

Through these research directions, we aim to create next-generation computational systems that not only understand, generate, and respond to music, but also co-create with humans, inspiring new paradigms of artistic expression and redefining the future of music technology.

Projects

Publications

- Zihao Wang, Le Ma, Chen Zhang, Bo Han, Yunfei Xu, Yikai Wang, Xinyi Chen, HaoRong Hong, Wenbo Liu, Xinda Wu, Kejun Zhang

- Zihao Wang, Kejun Zhang, Yuxing Wang, Chen Zhang, Qihao Liang, Pengfei Yu, Yongsheng Feng, Wenbo Liu, et al.

- Xing, B., Zhang, K.*, Sun, S., Zhang, L., Gao, Z., Wang, J., Chen, S.